-

General purpose computers continue to grow in power, capacity and complexity. We can safely ignore (or at least delay discussion of many of these developments), but programming does require some sense of what happens when a program executes. Just as economics uses models that are simpler than reality but hopefully capture key aspects of the situation, so for our purposes we are going to discuss a very simple computer.

The model computer we will discussed is named Tim♭The Replacements 1985, a long-forgotten cousin of the also nearly-forgotten Lisa.

Tim also resembles a clone of the IBM AT), circa 1985.

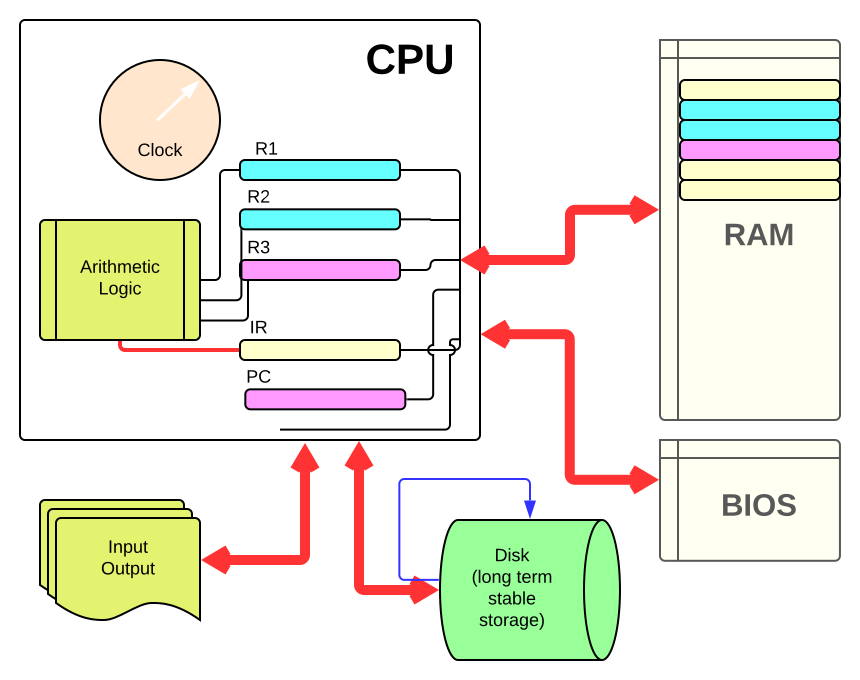

- Pleased to Meet Tim♭The Replacements 1987 The basic design of Tim is shown in Figure 1 which is the kind of stylized picture that a economist programmer might have in mind as they struggle to do computational economics. Tim is a complex electronic circuit made up of semi-conductors, gates that let electrical current flow through the circuit or not depending on the presence (or lack) of other electrical force. One such location that is on/off or 0/1 is a bit or binary digit. The current being on or off (or magnetic charge being positive or negative) at a location depending on other conditions allows the computer to represent logic (truth and falsity) and then to build from this hardware to represent numbers.

- Misty water-colored memories♭The Way We Were, Barbara Streisand 1970s The location of information in RAM or a drive is given by an address. The address is not a number intrinsically: like everything else digital it is simply a sequence of 0s and 1s that will route the circuitry to a physical location. An address refers to a a cell or a word. Physically adjacent memory cells have logically adjacent addresses when interpreted as a number, which is why it is useful to think of addresses as numbers. Add one to an address and you will be directed to the next location (possibly including a jump to the lowest address on the next memory chip). Humans are not good at handling long strings of 0s and 1s, so we convert the patterns into more compact forms. For example, four bits can take on 24 = 16 different values:

01012 = 0×23 + 1×22 + 0×21 + 1×20 = 0 + 1×4 + 0 + 1 =510.

When discussing different bases, the base is given since Binary Decimal Hexadecimal 0000 00 0 0001 01 1 0010 02 2 0011 03 3 0100 04 4 0101 05 5 0110 06 6 0111 07 7 1000 08 8 1001 09 9 1010 10 A 1011 11 B 1100 12 C 1101 13 D 1110 14 E 1111 15 F

- Other Terms

Unit Equals Distinct Values (base 10) Distinct Values (base 16) byte 8 bits 256 FF KB 1 Kilobyte 1024 bytes 4FF bytes MB 1 Megabyte 1,048,576 bytes F FFFF bytes GM 1 Gigabyte 1,073,741,824 bytes 4FFF FFFF bytes

- Some Memory Addresses and Contents on Tim

Address Contents Right Now ------------------------------------------- 069A 0000 FE99 0A32 BD01 069B 0887 D066 BD01 B36E 069C 1944 0000 0000 0033

The CPU processes instructions, which might be "add these two numbers together." Where does it get the instructions and how does it know what the instruction means? The answers since the year 1945 or so, are:

- "from RAM"

- and

- "because Tim's circuits are hard-wired to do so."

That is, Tim treats memory contents as both instructions as well as data. They are instructions because when the bit pattern is copied to the right physical location on Tim's CPU it causes the circuitry to process the bits at various locations in the CPU and RAM. They are data when the bit pattern is copied to physical locations on Tim's CPU with circuity that will interpret them as numbers or other information. The CPU does not have circuity to process arbitrary memory cells directly, for example to add contents of address069BandAE44. This would require direct connections to each memory cell instead of a single bus between RAM and CPU. Instead, the instruction to add memory cell contents together must include instructions to copy the contents to locations in the CPU. Then the contents of these location are added. Locations on the CPU that contain data and addresses to work on are called registers, illustrated by R1 and R2 on Figure 14. There are many registers in a CPU, but only a few are important to help understand programming. Recall a machine instruction is a sequence of 0s and 1s which may have been stored in RAM. The CPU cannot execute instructions directly from RAM just as it cannot directly add two memory cells together. An instruction must be copied from memory to the CPU to be executed, and it must be placed in the IR, the instruction register. The contents of the IR dictate what happens next to the state of Tim's circuitry. How does the CPU 'know' when it is done with the current instruction, and how does it know where to get the next instruction from? As usual, there is nothing but circuitry to provide the answer. In this case the circuity is a clock, which dictates what happens now and what happens next. The CPU cycle is like the parts of our brains that tell our heart to beat and our lungs to breathe. That part of the brain is made up of the same stuff as the part that lets you read and comprehend this sentence. But it must operate independently to impose order. The same is true of the instruction cycle and other cycles on a CPU: they must be semiconductor circuits, but they must be wired to move from one state to the next in very different way than the parts of the CPU that multiply numbers or compute $e^x$. The faster the clock speed, the more instructions can be carried out in the same amount of time, all else constant. As a typical early PC Tim has a 12 MHz clock speed: twelve million cycles per second. However, a single instruction can take several cycles to complete, so the clock speed is not the same as the instructions per second. Microprocessors passed the GHz point by the 2000s, nearly one thousand times the number of beats per second as Tim. With the development of multi-core computers and changes in how instructions work, the actual speed of work has gone up even more. But, like many things, these details do not change the basics required to understand what your program does. The instruction cycle dictates a sequence of actions on Tim. It relies on the instruction register (IR) and the program counter, usually denotedPC

but here called MPC for machine program counter. A basic instruction cycle might look like:Exhibit 12. Tim's Inner Instruction Cycle

Notice that the default says the next instruction is in the next memory address. If this were the only possible cycle it would be nearly impossible to re-program a computer. But the instruction set includes the possibility of resetting the MPC so that the sequence of instructions executed depends on the data. Most readers will understand that programs and applications execute on a computer within the context of an operating system (the OS). The OS is simply a large collection of instructions and data. In the old days operating systems had serious names like Unix, CP/M, AIX, and DOS. Later OS's got names that were more descriptive like Vista or Leopard or Ice Cream Sandwich. The operating system on Tim happens to be called Skyway.- Go to the address currently stored in the MPC and fetch (load) the contents. Put them in the IR.

- If the instruction says to change the MPC (jump to a new address), then reset the MPC and return to 1.

- Execute the instruction in the IR. This may involve reading or writing to RAM, manipulating values in registers, etc.

- Increment the MPC to the next memory cell and repeat.

- Freeze Frame♭J. Geil's Band 1980: Interrupting a running program

As a program, how can Skyway run at the same time as programs written to do economics (or play games or download music)? With multiple CPUs it is possible for the OS to run on some processors while programs and apps run on other processors. But computers seem to work the same with one CPU as with several, except perhaps faster. How does this happen? It is an illusion that the OS operates simultaneously with other programs and applications. An OS like Skyway is a privileged program in many ways, but it must hand over the CPU to other programs for them to do their work. The illusion is sustained because another part of Tim's CPU cycle occasionally interrupts whatever is the current instruction and returns control to some other location in memory. In particular, it would return control to Skyway for a slice of time. Skyway would carry out tasks to keep things running smoothly and when this timeslice expires control can be returned to the user's program for another time slice. - An Analogy to Interrupts An interrupt is like a rich homeowner leaving her condo once a week in order for a maid to come and clean. The maid finishes and leaves. The owner returns. If we were watching through the windows, but only occasionally and never when the maid is working, it would appear that the homeowner is enjoying a clean house without doing anything. By analogy, the special location that the MPC occasionally jumps to will carry out the housekeeping chores that are needed and then relinquish control again to the running program. It may not finish all the chores that week, but it knows that what is not done on this cycle can be done the next time it gets access to the condo. To strain the analogy a little bit towards accuracy: Imagine that instead of making the homeowner leave the house while cleaning the maid could simply freeze the homeowner, like in an episode of Star Trek. When finished the maid unfreezes the homeowner who does not realize that 30 minutes have gone by. The reason this analogy is better is that when two (or more) programs share the CPU on Tim only one is busy at a time. The others are suspended temporarily; they do not have to leave and come back. In fact, on Tim both the homeowner and the housekeeper get interrupted by the system. This way the homeowner is not frozen for hours when the maid has a lot to do. After a while the maid freezes and the homeowner wakes up and continues.

- Store the current MPC in a register and then set it to a special address (start of visit by the maid).

- Carry out the Inner Instruction Cycle as described above for a fixed number of clock cycles.

- Retrieve the saved address and store it in the MPC.

- Carry out the Inner Instruction Cycle for a fixed number of clock cycles.

- Return to 1.

Exhibit 11. Schematic for Tim

floppydisk drives served in the past.

0000, 0001, 0010, 0011, …, 1111. We can convert these patterns into a decimal number by treating each place as a power of 2, just as with decimal numbers:

010110 = 101 not 5.

Each memory address on Tim has a fixed address length. For example early PCs like Tim had 16-bit addresses. This became 32-bit and eventually 64-bit addresses. To think of a string like 0100 1011 1110 0011 as an address is not easy, but it is the same as 1942710, which is not too daunting. However, the number of decimal digits required to present a string of bits is not even, because powers of 10 are not the same as powers of 2. Four bits require 2 decimal digits to code (0 to 15), but that leaves 84 decimal numbers with no purpose, 16 to 99. So, decimal is more compact and easier for our eyes to process than binary, but it is not a good code for summarizing strings of bits that come in powers of 2. A code with 8 or 16 numerals works well, hence the use of hexadecimal. First count 0 to 9, same as decimal, then A for 1010, B = 1110, C = 1210, D = 1310, E = 1410, F = 1510. A single hexadecimal numeral corresponds to four bits, no more no less. A 64-bit address is the same as 16 hexadecimal digits.

Table 4. 4-bits in Different Bases and Other Terms

1B9E.

Since we are just making up addresses and not actually wiring the circuits on Tim, addresses will have one letter to emphasize they are hexadecimal not an ordinary decimal like 9300. Contents of the memory cell 1B9E might look something like 0000 FE99 0A32 BD01. A space will appear every four digits, which means if an address is stored in the memory cell it will appear on its own in our notional memory contents.

Exhibit 13. Tim's Outer Instruction Cycle

Summary

- All electronic computers include processors (general and specialized), random access memory, long-term storage (disks) connected by buses, and input/output devices

- Memory is stored in words that have digital addresses. Hexadecimal notation is convenient for representing binary information stored in a number of place that is a power of 2 (such as 32 or 64 bits).

- The CPU operates on an instruction cycle controlled by an electronic clock. Instructions are stored in memory and are executed when loaded into the instruction register (IR) on the CPU.

- A single action in our minds, like assign

ythe value ofz, requires multiple machine instructions to move memory contents to and from the CPU and manipulate them inside registers. - Slices of time are shared by running applications. Timesharing is possible because the clock (and I/O devices) can interrupt the instruction cycle, forcing a running program to relinquish control of the CPU temporarily.